Last month, The New York Times “reviewed” the still-in-the-works Participant Media Index designed to measure the impact and engagement of social issue documentaries. Anyone in the nonprofit world knows that “impact” and “engagement” are the buzzwords du jour.

But more than a passing fad, impact evaluation is serious business, one that many of us in the social change realm grapple with every day. This has not always been the case in the 18 years I’ve worked in the sector. Funders have increasingly driven the trend, asking us, their grantees, not only to monitor our progress but also to develop innovative ways to quantify that progress and share our learnings more broadly. In this way, the nonprofit world is catching up with the fields of medicine, psychology and education, all of which have embraced “evidence-based practice” over the past two decades.

This is mostly a positive development. By laying out concrete objectives and outcomes at the start of a grant (in the proposal), organizations are forced to think more strategically and are held accountable for delivering on their promises. The most forward-thinking funders understand the risk inherent in our work: social investments, like those in business, are not guaranteed to succeed, and organizations can learn as much from their failures as from their achievements. Yet careful planning (yes, even the ubiquitous Logic Framework) can help increase the odds that we uphold our end of the bargain: ensuring that precious resources successfully mobilize positive social change.

WITNESS has long been considered an innovator in impact evaluation, beginning in the mid-2000s with our groundbreaking Performance Evaluation Dashboard, which other nonprofits used as a model, and continuing with a major new effort we have launched to overhaul our program. We are constantly looking for new ways to maximize our performance and learnings. But this approach is not without its challenges. Human rights advocacy is notoriously difficult to measure; change is often incremental, and ultimate “wins” can take years to achieve. Video advocacy is even more complex, since video is a complementary tool, intended to corroborate other, more traditional forms of documentation.

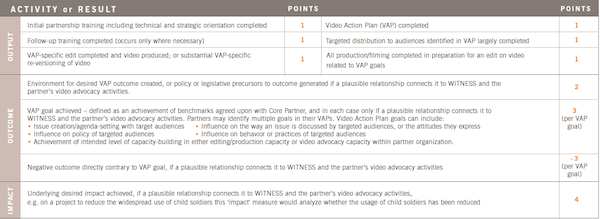

A point system for tracking Outputs, Outcomes and Impacts from WITNESS’ first Performance Dashboard covering our fiscal year 2006

All this we have known for years. But these days, when millions around the world are turning to video, it can feel like a Herculean task to measure WITNESS’ ultimate objective: to ensure that those millions with cameras in their hands can document human rights abuse safely and effectively and help secure justice. In our quest to build a global ecosystem of video-for-change users, we must find new ways to scale up our sharing of skills and resources and harness the power of networks to reach exponentially more people. We must also establish constant feedback loops so we can listen and respond to real needs on the ground and support what our grassroots partners identify as their desired end results.

But this leveraging of human capital and the resulting changes in behavior, policies and practices do not lend themselves to easy (or, necessarily, short-term) measurement. Few organizations have the resources to invest in longitudinal follow-up studies that could last years. Furthermore, in WITNESS’ case, when clear impact results are achieved, it is simply not in our DNA to claim sole ownership over what is always a collaborative and shared process.

It’s becoming clear that a rapidly changing technology landscape requires human rights organizations to adapt and become more nimble. We would argue that funders also need to adapt their approaches. Many are still asking for outdated ways of measuring impact, for instance by overemphasizing numbers or by holding grantees accountable to rigid, predictive multi-year outcomes (a potential pitfall of Logic Frameworks). This article from the Stanford Social Innovation Review on “emergent strategy” discusses the new trend toward balancing clear goal setting with room for iteration and responsiveness along the way, particularly within complex social change work like human rights. It says:

> To solve today’s complex social problems, foundations need to shift from the prevailing model of strategic philanthropy that attempts to predict outcomes to an emergent model that better fits the realities of creating social change in a complex world.

This fascinating blog post by Iain Levine of Human Rights Watch captures the essence of this new perspective, including examples (e.g., in Syria) where success is elusive, yet the moral imperative of human rights engagement perhaps overrides ultimate outcomes.

So given the new landscape, and the new thinking around how to best evaluate social change work, what are we doing differently these days at WITNESS? For starters, we have begun to build new evaluation mechanisms into our workflow, constantly collecting both quantitative and anecdotal data from the field. This post on the reach of our Forced Evictions Toolkit is one example of the ways we are aggregating and interpreting information.

We are also capturing the stories that make video such a powerful tool for change. In Syria, for instance, we provided Waseem, a 25-year-old student from Homs, with video equipment and a series of in-depth online trainings that enabled him to grow into a seasoned citizen journalist. Today, Waseem’s videos are featured on major media outlets covering the crisis in Syria, and he trains other local activists in the skills he learned from WITNESS.

Since we cannot possibly track the ongoing impacts of every person who attends a training or downloads our resources online, WITNESS has also begun to work with external consultants on “deep dives” into selected programs that serve as emblematic examples of our approach. We recently completed an analysis of our three-year focus on Forced Evictions (available upon request), undertaken with the Adessium Foundation. Findings like these are intended to feed back into the adaptation of that particular program — always in collaboration with our grassroots partners so we can (in emergent strategy-speak) “co-evolve” our collective approach. Given the cross-pollination among all WITNESS programs, such findings are also intended to strengthen our other core initiatives and, we hope, those of our peers.

We’re happy that the topic of monitoring and evaluation is getting serious consideration from mainstream media, because it is work that social change organizations often do behind the scenes. The more we can develop a common understanding, and common standards, across our sector, the more effective, efficient and impactful we can all become.

This article was originally posted on the WITNESS Blog and cross-posted with permission.

Sara Federlein is Associate Director, Foundations, at WITNESS, where she has overseen a fourfold increase in institutional giving since she began in 2003.

Featured image courtesy of Creative Commons. Image of Sara Federlein by CJ Isaac for WITNESS.